What it means to be an AI full-stack company: Beyond just code

We talk about AI changing how we code. But it's also changing how we understand what to build.

Everyone's using AI to write code now. GitHub Copilot, Cursor, Claude—the tooling is everywhere. But that's only scratching the surface.

What happens when you use AI agents not just for implementation, but for the entire product lifecycle? Market research. User intent analysis. GTM strategy. Content generation.

We ran an experiment to find out.

The experiment: Building a product end-to-end with AI

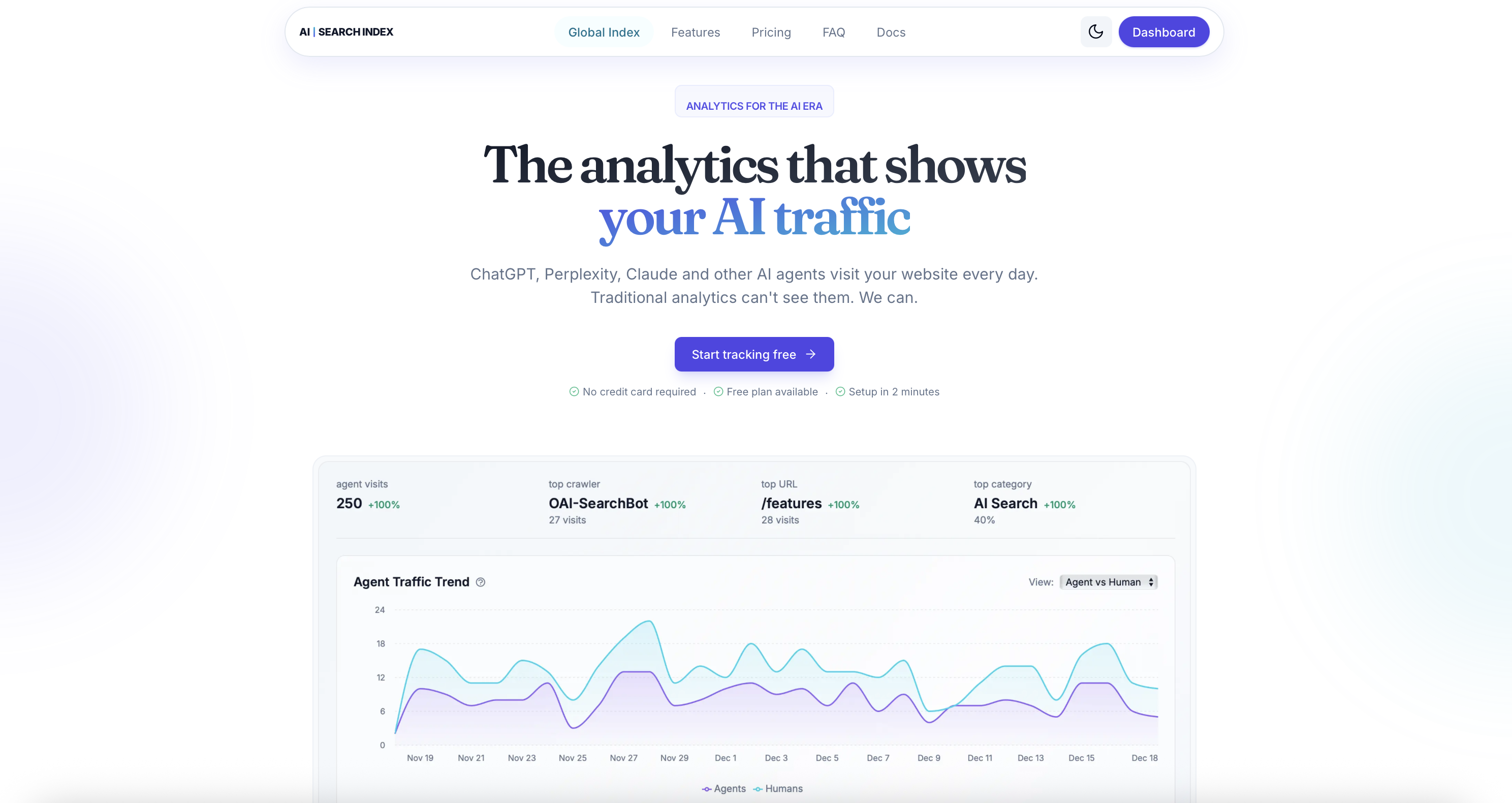

We launched AI Search Index—a spin-off from Superlines' AI bot analytics feature—as a standalone product. The goal wasn't just to ship fast. It was to test whether AI agents and MCP servers could handle the full stack of product development.

Not "full stack" as in frontend and backend. Full stack as in: everything from identifying the opportunity to reaching customers.

Here's what the AI handled:

Market research & pain point identification. Using web scraping MCP servers to analyze competitor positioning, pricing models, and gaps in the market. Finding conversations on Reddit, support forums, and Hacker News where people expressed frustration with current analytics solutions.

User intent analysis. Scraping trending AI search data to understand what questions people were actually asking. What problems they were trying to solve. Where the real demand was.

GTM strategy. Generating positioning frameworks, target audience definitions, and channel prioritization based on the research data.

Programmatic content generation. Creating articles derived from trending AI search data, Reddit discussions, and support forum patterns. Not generic SEO content—content that addresses specific questions real people are asking.

What I still did manually

Let's be honest about the human-in-the-loop parts:

- Prompting. The AI doesn't know what questions to ask itself. That's still the job.

- Product screenshots. Visual assets still require human judgment.

- Service configuration. Signing up for Supabase, Resend, and other services. Setting up environments. The boring plumbing.

- Product Hunt launch. Writing the launch copy, timing the submission, responding to comments.

- Some testing. Though most was automated, edge cases still needed human eyes.

The prompting part is key. Knowing what to ask—and how to chain prompts together across different MCP servers—is the skill that makes this work. The AI doesn't generate strategy on its own. It executes strategy you've defined through carefully structured prompts.

The MCP server stack that made this possible

The Model Context Protocol is what turned this from theory into practice. Here's the architecture:

Research layer:

- Bright Data MCP for scraping competitor sites, forums, and search results

- Web search MCP for gathering real-time market data

- Reddit and forum analysis for pain point extraction

Analysis layer:

- Data processing pipelines for aggregating insights

- Trend identification from AI search patterns

- Sentiment analysis on user discussions

Execution layer:

- Content generation based on research outputs

- SEO/GEO optimization informed by search intent data and Superlines data

- Automated testing for core functionality

The beauty of MCP is that these servers communicate. Data flows from research to analysis to execution without manual handoffs. The AI agent orchestrates the entire pipeline.

Results: International users in week one

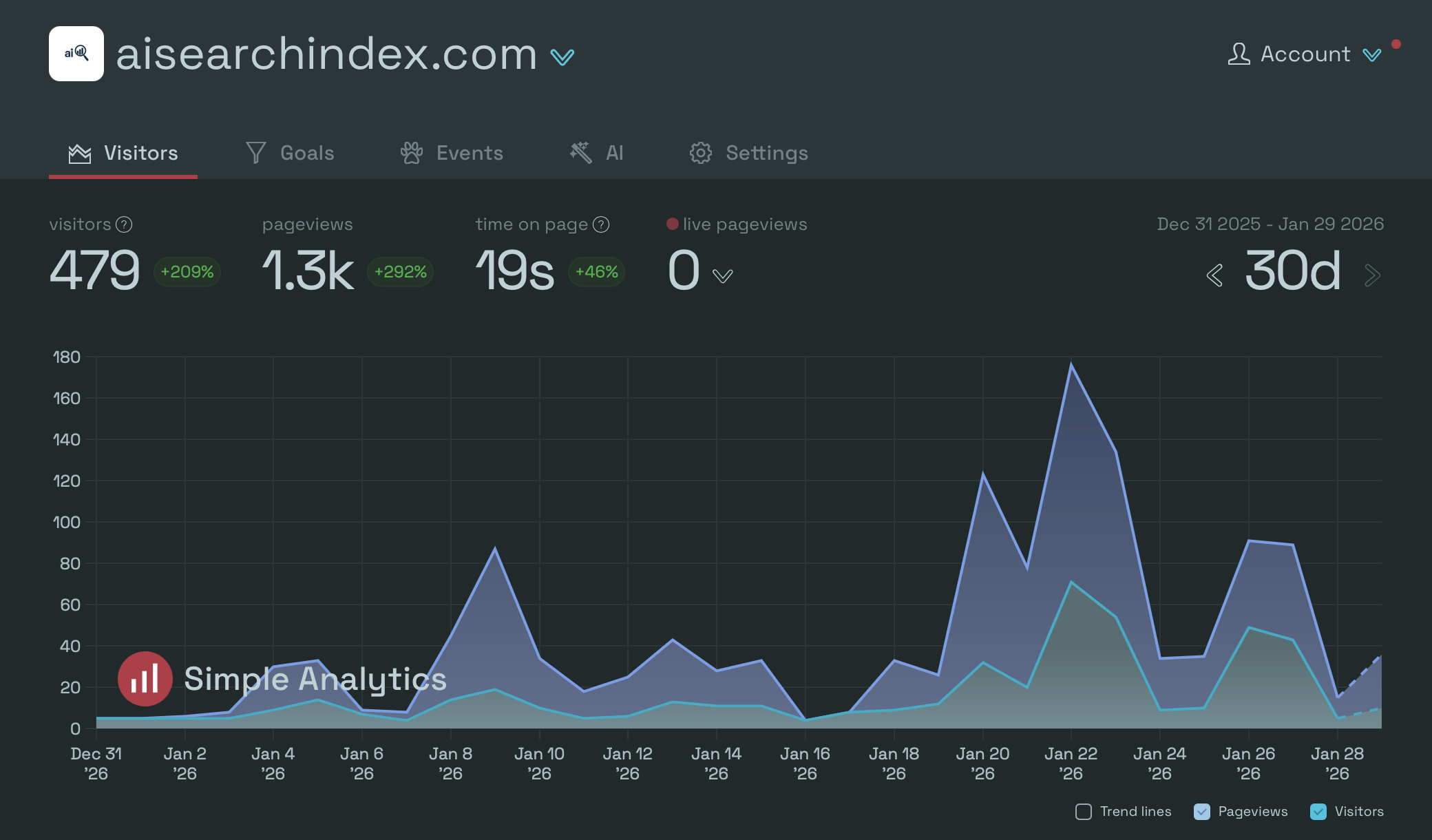

AI Search Index launched on Product Hunt last week. The results:

- International users from day one

- Organic traffic from both Google and LLMs (yes, AI agents are now referring users to us—which is fitting, given what the product does)

- 500+ active users tracking their AI bot traffic

- 10,000+ URLs analyzed through the platform

The product tracks AI crawler traffic that traditional analytics miss. GPTBot, PerplexityBot, ClaudeBot, and 60+ other AI agents that visit websites daily. It's a product for the agentic web—built using the agentic web.

What this changes about product development

Here's the insight that's harder to see: AI isn't just compressing the implementation phase. It's compressing the entire discovery-to-delivery cycle.

Before AI full-stack:

- Spend weeks on market research

- Spend days synthesizing insights

- Define product requirements

- Build the product

- Create marketing content

- Launch and iterate

With AI full-stack:

- Run research agents overnight

- Get synthesized insights by morning

- Generate product specs from research

- Build with AI-assisted coding

- Generate content programmatically

- Launch and iterate with AI-powered analytics

The compression isn't 10x on any single step. It's 3-5x on every step, which compounds to something that feels almost unfair.

The skill that matters now

A recent post on Dev.to captures this shift: developers are using AI agents + MCP to fully automate content workflows. Figma's team wrote about how MCP servers are becoming the unlock for design systems. Microsoft published their list of 10 MCP servers for development workflows.

The pattern is clear. The most productive builders aren't just using AI to write code. They're using AI to:

- Research what to build

- Analyze who needs it

- Generate content that reaches them

- Test whether it works

- Iterate based on data

The skill that matters isn't "prompt engineering" in the narrow sense. It's knowing how to design systems of prompts that accomplish business objectives. It's understanding which MCP servers to chain together. It's having the product judgment to know what questions to ask in the first place.

The AI full-stack company isn't about replacing humans

There's a tendency to frame this as automation replacing human work. That's not what happened here.

What happened: human judgment got amplified. Instead of spending time on data collection and synthesis, I spent time on decisions. Which opportunity to pursue. What positioning to take. When to ship.

The AI handled the grunt work. But the strategic choices? Those are still human.

That's what "AI full-stack company" actually means. Not "AI does everything." It means AI handles execution across the entire stack, while humans provide direction.

What this means for builders

If you're still just using AI for code completion, you're leaving most of the value on the table.

Here's a starter framework:

- Map your product lifecycle. What are the steps from idea to customer?

- Identify the research-heavy steps. Where do you spend time gathering and synthesizing information?

- Find MCP servers for those steps. Web scraping. Search. Data analysis. Content generation.

- Design prompt chains. How does output from one step become input for the next?

- Run the pipeline. Let it execute overnight while you sleep.

The builders who figure this out first have a structural advantage. Not because the technology is secret—it's all out there. But because the skill of orchestrating AI across the full product stack is still rare.

The takeaway

We talk a lot about AI changing how we build and code.

But it's also changing how we understand what to build. And how to get it to the people who need it.

AI Search Index isn't just a product built with AI. It's a proof point that the entire product lifecycle—from research to launch—can be AI-augmented.

That's what being an AI full-stack company means.

Try AI Search Index: Track the AI agents that visit your website at aisearchindex.com. Setup takes 2 minutes with one line of code.

Discussion questions:

- What percentage of your product lifecycle is currently AI-assisted?

- Which non-coding steps would benefit most from AI augmentation?

- What's holding you back from using MCP servers for research and GTM?